Open Media day @ FOSDEM’16

You might have wondered whether I had disappeared from Earth over the last 16 months. Well, not exactly. I was quite busy writing articles for Streaming Media, which you have probably read, and I hope you found useful insights in them. Today I’m officially reopening the Eltrovemo blog after this long editorial break, and it starts straight away with a teaser: my next Streaming Media article after the “State of DASH 2016” will be about the role of Open Source in the video ecosystem. A major role, powered by dedicated people who deserve our respect and gratitude. Here is an appetizer 🙂

For those who don’t know it, FOSDEM is one of the legendary European events of the open source community, where free beer meets lightning talks, tech lectures and hacking sessions. It’s a crowded two-day event in Brussels, gathering lots of open source developers with different focuses, from Linux to databases, virtualization, security or programming languages… and of course media! This year I had the pleasure of attending Saturday’s Open Media devroom program (powered by the EBU team), where I spotted interesting stuff for the media folks reading this blog. As I’m focusing on OTT and workflows, this report doesn’t claim to cover the whole day’s agenda, but you can find most of the PDF presentations on the Open Media devroom page, and maybe the corresponding videos here at some point… Here we go!

Kaltura platform

While they’re not an official sponsor of the FOSDEM event, Kaltura had three slots during the day to present and promote their Open Source Video Platform. The first slot was about the transcoding architecture based on MediaInfo and FFmpeg, and how the Kaltura Decision Layer orchestrates the transcoding workflow. The topic was a bit too dense for a 25-minute slot, so the best way to get more details beyond the presentation is probably to crawl through the “Kaltura Media Transcoding Services and Technology” KB page. Jess Portnoy mentioned an interesting solution at the end of her presentation: Kaltura’s Nginx VOD module, which repackages local or remote MP4s into all ABR formats including DASH (with HEVC and CENC support), and also supports simulated live from a playlist of source files.
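Simulated live is easier to picture with a little arithmetic: a looping playlist of VOD files is mapped onto wall-clock time, so every viewer joining at a given moment lands at the same position. The Python sketch below shows that mapping under stated assumptions: the playlist is a hypothetical list of (file name, duration) pairs that loops forever from a given channel start time. It is an illustration of the idea, not the Nginx VOD module’s actual implementation or configuration.

```python
from dataclasses import dataclass


@dataclass
class PlaylistItem:
    """One source file in a hypothetical simulated-live playlist."""
    name: str
    duration: float  # seconds


def live_position(playlist, channel_start, now):
    """Return (item_name, offset_in_item) for wall-clock time `now`.

    Assumes the playlist loops forever starting at `channel_start`
    (both times in seconds, e.g. Unix timestamps).
    """
    if now < channel_start:
        raise ValueError("channel has not started yet")
    total = sum(item.duration for item in playlist)
    if total <= 0:
        raise ValueError("playlist must have a positive total duration")
    # Position inside the current loop of the playlist.
    t = (now - channel_start) % total
    for item in playlist:
        if t < item.duration:
            return item.name, t
        t -= item.duration
    # Floating-point edge case: clamp to the end of the last item.
    last = playlist[-1]
    return last.name, last.duration
```

For example, with a 30-second intro followed by a 600-second show, a viewer arriving 45 seconds after the channel start lands 15 seconds into the show, and one arriving 640 seconds after the start is back 10 seconds into the intro.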

The second Kaltura presentation, by Renan Gutman, was about interactive video experiences. It was not technical but rather an exploratory walkthrough of the Kaltura Player’s new features, like Streammersion for Oculus Rift, Viewer as the Director with video and slides navigation control, Spark conversation for Q&A sessions during live events, or time-synced display of related information. If you want to experiment with the Kaltura player, you can check the player KB page or the FOSDEM 2015 presentation, which is a good introduction to the HTML5, iOS and Android libraries/SDKs. Widevine Modular DRM is supported, and PlayReady support is around the corner.

Finally, “Develop your own media portal using Kaltura open source API” by Assaf Berkovitz and Rotem Haber provided a preview of what it takes to use the publishing features of the Kaltura server together with the Kaltura player. The community edition of Kaltura Server comes without support apart from the Community Forums, which is to be expected given Kaltura’s business model, and the documentation is sparse, so it might be a bit challenging to implement; but it has been proven to work, and that’s what matters.

VLC 3.0

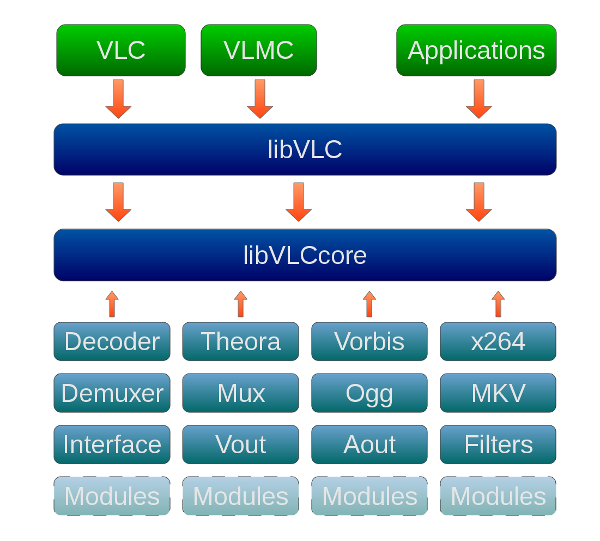

After 15 years of GPL activity, VLC is one of the veterans of the open source media space and one of its most active projects: a highly respected cross-platform video player available for Win/Mac/Linux/ChromeOS desktops and iOS/Android/WinRT mobiles with feature parity, now expanding into the STB/console space with tvOS and Android TV versions already published and an Xbox One version coming this summer. There is even a Tizen version to reach Samsung devices like Smart TVs. VLC’s leader Jean-Baptiste Kempf presented the new features of version 3.0.0, some of which are likely to interest OTT folks: a unified HLS/DASH/Smooth module, an HTTP/2 stack, GPU HEVC decoding and TTML subtitle support. Technically speaking, it’s entirely possible to reuse libVLC to build custom (compiled) ABR players for all platforms supported by the VLC player, since libVLC is its underlying SDK. Now that the OTT features are becoming more mature, we might see many more players using the library, especially with the advent of DASH+HEVC. However, the lack of DRM support will most likely restrict these implementations to free-to-air content.

dash.js and EBU-TT-D

Solène Buet presented hands-on feedback from her 2015 internship at the EBU, where she implemented support for EBU-TT-D, the newest subtitling standard, inside the open source dash.js player. Carried over ISO-BMFF, EBU-TT-D is a TTML variant with the ambition of unifying the whole video industry: the EBU-TT family proposes a variant for each part of the chain, from subtitle production to distribution, and provides a bridge with the EBU-STL format, which has been highly popular since the 90s. EBU-TT-D has won a significant battle, as it was introduced in HbbTV 2.0 as the reference subtitling format in combination with DVB-DASH, and now the challenge is to overcome WebVTT on the web. Compared to TTML 1.0, EBU-TT-D brings multi-row alignment and line padding, which is obviously not a revolution, but it benefits from TTML’s advanced styling and positioning capabilities, which are a strength compared to WebVTT. Will that be enough to take precedence over WebVTT? Not sure: WebVTT’s position is strong even if the format’s capabilities are limited. Solène noted that despite the W3C’s recommendation about text track cues, only WebVTT cues are available in browsers to carry the data extracted by the TTML parser, a simple but limited option, and the display is done through a <div> tag inside the <video> tag. Thanks to her work, EBU-TT-D support has made its way into one of the major DASH desktop players, and the journey continues with the upcoming dash.js v2.0 (currently in RC2) and IRT’s work, as accessibility and multi-language support are long-term challenges. If you want to try it yourself, you can produce EBU-TT-D content from EBU-STL with the Subtitle Conversion Framework (SCF) that Andreas Tai from IRT presented last year, and you can segment it for DASH using MP4Box. A very good transition to the next project in this lineup…
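To give an idea of what a TTML parser has to do before handing data over to the browser’s text track cues, here is a minimal Python sketch that extracts (begin, end, text) tuples from a tiny EBU-TT-D-like document. It assumes `hh:mm:ss.mmm` clock times and plain `<p>` content only; real EBU-TT-D parsing (styles, regions, line breaks) is considerably richer, and this is not dash.js code.

```python
import xml.etree.ElementTree as ET

TTML_NS = "http://www.w3.org/ns/ttml"


def parse_clock(value):
    """Convert an 'hh:mm:ss.mmm' clock time to seconds (other time formats not handled)."""
    hours, minutes, seconds = value.split(":")
    return int(hours) * 3600 + int(minutes) * 60 + float(seconds)


def extract_cues(ttml_text):
    """Return (begin, end, text) tuples for each <p> element of a TTML document."""
    root = ET.fromstring(ttml_text)
    cues = []
    for p in root.iter(f"{{{TTML_NS}}}p"):
        # itertext() flattens nested <span> elements into plain text;
        # styling and positioning information is deliberately dropped here.
        text = "".join(p.itertext()).strip()
        cues.append((parse_clock(p.get("begin")), parse_clock(p.get("end")), text))
    return cues


SAMPLE = """<tt xmlns="http://www.w3.org/ns/ttml" xml:lang="en">
  <body><div>
    <p begin="00:00:01.000" end="00:00:03.500">Hello <span>FOSDEM</span></p>
    <p begin="00:00:04.000" end="00:00:06.000">Open Media devroom</p>
  </div></body>
</tt>"""
```

In a browser, each tuple would roughly map to a `new VTTCue(begin, end, text)` added to a text track, which illustrates Solène’s point: the rich TTML styling has to be rendered separately because the cue object only carries WebVTT semantics.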

GPAC MP4Box.js

In the 15-year-old category, GPAC is another heavyweight OSS project alongside VLC. Bringing both a packager (MP4Box) and a video player (OsmoMP4Client), GPAC is expanding its footprint into the browser and Node.js with MP4Box.js, a new project launched in 2015. This library covers a functional scope close to MP4Box’s, allowing client-side parsing and analysis of MP4 files, on-the-fly fragmentation of non-fragmented MP4 files for MSE-based players, and extraction of text tracks or byte ranges. It was quite interesting to hear Cyril Concolato explain the service worker architecture and how the MP4Box service worker can intercept and modify data exchanged between the browser and the server, much like a transparent but powerful proxy. The on-the-fly fragmentation feature is particularly interesting: it saves you from deploying a specific player for progressive download playback and lets you reuse your existing DASH player for non-fragmented content, while avoiding a dedicated server-side repackaging solution. That’s a pretty elegant solution for content in the clear, and quite in line with the new trend of players that repackage streams on the client side, like rx-player, which can transform Smooth into DASH. But what I see as the killer feature is data extraction, as demonstrated with the HEVC image viewer: it can extract the data from random access points and export it as BPG thumbnails. In today’s OTT world, you could also apply it to AVC data from the in-buffer DASH segments to generate the thumbnail sequence on the fly, avoiding all kinds of painful backend processing for this use case. The GPAC team is always ahead of the game, and this is precisely what makes this project so special.
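Everything MP4Box.js does starts with walking the ISO-BMFF box structure. As a rough illustration (in Python rather than JavaScript, and not MP4Box.js’s API), the sketch below lists top-level boxes: each box starts with a 32-bit big-endian size and a four-character type, a size of 1 means a 64-bit size follows the type, and a size of 0 means the box extends to the end of the file. A non-fragmented file typically shows a single `moov` followed by `mdat`, whereas a fragmented one shows repeated `moof`/`mdat` pairs, which is the layout on-the-fly fragmentation has to produce for MSE. The `box()` helper is hypothetical and only builds test data.

```python
import struct


def list_boxes(data):
    """Return (type, offset, size) for each top-level box in an ISO-BMFF buffer."""
    boxes = []
    offset = 0
    while offset + 8 <= len(data):
        size, box_type = struct.unpack_from(">I4s", data, offset)
        header = 8
        if size == 1:
            # 64-bit "largesize" field follows the type.
            if offset + 16 > len(data):
                break
            (size,) = struct.unpack_from(">Q", data, offset + 8)
            header = 16
        elif size == 0:
            # The box extends to the end of the buffer.
            size = len(data) - offset
        if size < header:
            raise ValueError(f"invalid box size {size} at offset {offset}")
        boxes.append((box_type.decode("latin-1"), offset, size))
        offset += size
    return boxes


def box(box_type, payload=b""):
    """Build a simple box with a 32-bit size header (test helper)."""
    return struct.pack(">I4s", 8 + len(payload), box_type.encode("ascii")) + payload
```

A real parser would then descend into `moov` to read the sample tables, which is where the sync-sample (random access point) information needed for thumbnail extraction lives.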

Upipe

Many of you probably know OpenHeadend, the workflow solution that can ingest live TS streams, turn them into catch-up, remux them and much more. Upipe is the flexible C dataflow framework that powers OpenHeadend, now supported by three companies and approaching version 1.0. Its creator Christophe Massiot presented Upipe’s main workflow mechanisms: the “pipes”, which have at most one input and one output but can be combined by including inner pipes inside a top-level pipe. Pipelines can be built dynamically to adapt to events like changes in the elementary stream structure, and eight pipe types can be combined so far. Christophe acknowledged that the C library is not the easiest to integrate, which has led the team to discuss bindings for other languages, like Lua with the lj-upipe project. A Node.js integration is also under examination, which would definitely be a great move forward considering the popularity of Node these days. Upipe is a really powerful toolkit with a promising future ahead. Let’s keep an eye on it!
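The “one input, one output, inner pipes inside a top-level pipe” model is easier to grasp with a toy example. The following Python sketch is purely hypothetical and does not mirror Upipe’s C API: each `Pipe` forwards its results to at most one output, and a `Bin` wraps a chain of inner pipes so that it behaves like a single pipe from the outside.

```python
class Pipe:
    """Toy pipe: receives items via input() and forwards results to at most one output."""

    def __init__(self, func):
        self.func = func    # transformation applied to each item
        self.output = None  # at most one downstream pipe

    def set_output(self, pipe):
        """Connect (or re-connect at runtime) the single downstream pipe."""
        self.output = pipe
        return pipe  # allows chaining: a.set_output(b).set_output(c)

    def input(self, item):
        result = self.func(item)
        # Returning None from func drops the item (acts as a filter or a sink).
        if result is not None and self.output is not None:
            self.output.input(result)


class _BinExit:
    """Forwards items leaving a bin's inner chain to the bin's own output."""

    def __init__(self, owner):
        self.owner = owner

    def input(self, item):
        if self.owner.output is not None:
            self.owner.output.input(item)


class Bin(Pipe):
    """Top-level pipe wrapping a chain of inner pipes; looks like one pipe from outside."""

    def __init__(self, *inner):
        if not inner:
            raise ValueError("a bin needs at least one inner pipe")
        super().__init__(func=None)
        # Wire the inner pipes one after another.
        for upstream, downstream in zip(inner, inner[1:]):
            upstream.set_output(downstream)
        # The last inner pipe exits through the bin's own output.
        inner[-1].set_output(_BinExit(self))
        self.first = inner[0]

    def input(self, item):
        self.first.input(item)
```

Calling `set_output()` again while data is flowing is the toy equivalent of rebuilding part of a pipeline when the elementary stream structure changes.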

Nageru

Let’s conclude this report with Nageru, a new project by Steinar Gunderson. He presented his beta video mixer for Linux just before open sourcing the code. It’s a piece of software designed and optimized to run on commodity laptops with Blackmagic Intensity Shuttle HDMI and UltraStudio SDI USB3 capture cards. Nageru lets you mix video sources with cut and fade transitions and control five characteristics of the live input audio tracks. The resulting mix is recorded to disk and made available on a local web server as a 25 Mbps live QuickTime stream (H.264 + PCM audio) encoded with Quick Sync, which you can then transcode on the fly with a tool like VLC. Overall it’s not (yet) a replacement for commercial video mixers, as no HDMI video output is available for now, but it’s still an interesting option if you want to recycle a laptop and just invest in some cheap capture cards to power your webcast. Steinar also gave a live demo of it during the event.
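A fade transition boils down to a per-pixel linear blend between the outgoing source A and the incoming source B, `out = (1 - alpha) * A + alpha * B`, with `alpha` ramping from 0 to 1 over the transition (a cut is simply a jump straight to 1). The Python sketch below only illustrates that arithmetic on frames represented as lists of 8-bit samples; the frame representation and the function names are assumptions for illustration, not Nageru’s code.

```python
def fade_factor(frame_index, fade_frames):
    """Mix factor in [0, 1] for a linear fade lasting `fade_frames` frames."""
    if fade_frames <= 0:
        return 1.0  # a cut: switch to the new source immediately
    return min(max(frame_index / fade_frames, 0.0), 1.0)


def blend(frame_a, frame_b, alpha):
    """Blend two frames given as equal-length lists of 8-bit samples.

    alpha = 0 shows only frame_a, alpha = 1 shows only frame_b.
    """
    if len(frame_a) != len(frame_b):
        raise ValueError("frames must have the same size")
    return [round((1 - alpha) * a + alpha * b) for a, b in zip(frame_a, frame_b)]
```

A real mixer applies the same blend to whole frames at the output frame rate, for instance computing `fade_factor(n, 25)` at frame `n` for a one-second fade at 25 fps.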

That’s it for today. I hope this blog post has given you some nice ideas for integrations and forks. Once again, I advise you to check the FOSDEM’16 event page for a more comprehensive view of all the video topics presented; there is much more interesting stuff there. Thanks to the FOSDEM and EBU teams for putting together such an interesting day of sharing, and see you there next year!